How to optimize Linux real-time capabilities?

The OSADL QA Farm knows how.

It is a truism that a real-time operating system does not by itself guarantee a minimal worst-case latency under all conditions and on any hardware. Rather, it is the overall integration and configuration of hardware, firmware, operating system and applications that determines how fast a system reacts. The OSADL QA Farm at osadl.org/QA/ therefore now contains new sections that illustrate the effects of kernel configuration and run-time settings on real-time capabilities. Standard real-time data are displayed as long-term latency recordings in idle state and under load, and as cyclictest-generated latency plots.

In addition, we investigated the effect of single variables on the worst-case latency. The following two examples were obtained on a 4-core Intel Sandy Bridge processor (i7-2600K @3400 MHz) and on a 6-core AMD processor (Phenom II X6 1090T), located in rack #4/slot #6 and rack #1/slot #1, respectively. In both cases, latency plots were obtained by running cyclictest with a cycle interval of 200 µs and a total of 100 million loops, resulting in a measurement duration of 5 hours and 33 minutes each.
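The stated duration follows directly from the cycle interval and the loop count. The sketch below only redoes that arithmetic; the function name `run_duration` is made up for illustration. The cyclictest call in the comment is an assumption as well: only the interval (`-i`) and loop count (`-l`) are given in the text, the other options are merely a plausible choice for this kind of measurement.

```shell
#!/bin/sh
# Assumed invocation, not taken from the text above (only -i and -l are stated there):
#   cyclictest -m -S -p99 -i200 -h400 -l100000000 -q

# Print the run time of a cyclictest measurement.
# $1 = cycle interval in microseconds, $2 = number of loops
run_duration() {
    total_s=$(( $1 * $2 / 1000000 ))
    printf '%d h %d min %d s\n' \
        $(( total_s / 3600 )) $(( total_s % 3600 / 60 )) $(( total_s % 60 ))
}
```

Calling `run_duration 200 100000000` prints `5 h 33 min 20 s`, which matches the 5 hours and 33 minutes quoted above.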

Effect of CPU frequency scaling (throttling)

In a first recording, the performance scaling governor was selected. This can be done online (if the kernel is configured appropriately) using the short shell script

    cd /sys/devices/system/cpu
    for i in cpu[0-9]*
    do
      echo performance >$i/cpufreq/scaling_governor
    done
    cd -

or defined permanently by configuring the kernel with

    CONFIG_CPU_FREQ_DEFAULT_GOV_PERFORMANCE=y
    CONFIG_CPU_FREQ_GOV_PERFORMANCE=y
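Whichever way the governor is selected, it may be worth checking what is actually active before starting a measurement. The following is a minimal sketch, not part of the original setup; the function name `show_governors` and its optional root argument are made up here, the latter only so that the function can be tried on a mock directory tree.

```shell
#!/bin/sh
# Print the active cpufreq governor of every CPU below a sysfs-style root.
# $1 = optional root directory (default: /sys/devices/system/cpu)
show_governors() {
    root=${1:-/sys/devices/system/cpu}
    for f in "$root"/cpu[0-9]*/cpufreq/scaling_governor
    do
        [ -r "$f" ] || continue     # skip CPUs without cpufreq support
        cpu=${f#"$root"/}           # strip the leading root directory
        cpu=${cpu%%/*}              # keep only the cpuN component
        printf '%s: %s\n' "$cpu" "$(cat "$f")"
    done
}
```

On a system where the kernel was configured as above, every line printed by `show_governors` should end in `performance`.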

Ondemand CPU frequency scaling was used in a subsequent recording; this was done by echoing ondemand instead of performance to the related governor entry in the /sys virtual file system. As can be seen in the result plot, the worst-case latency decreases significantly when throttling is disabled by setting the CPU frequency scaling governor to performance. All but one CPU or hyperthread show this effect. The reduction is more or less proportional to the increase of the clock frequency from a minimum of 1600 MHz (ondemand) to a constant 3400 MHz (performance).
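Switching back and forth between the two governors for such a comparison can be wrapped in a small helper. This is a sketch under assumptions, not the script used for the recordings: the name `set_governor` and the optional root argument are hypothetical, and the check against `scaling_available_governors` is an added precaution that is skipped when that file is absent.

```shell
#!/bin/sh
# Write the given governor to every CPU below a sysfs-style root.
# $1 = governor name, e.g. performance or ondemand
# $2 = optional root directory (default: /sys/devices/system/cpu)
set_governor() {
    gov=$1
    root=${2:-/sys/devices/system/cpu}
    for d in "$root"/cpu[0-9]*/cpufreq
    do
        [ -w "$d/scaling_governor" ] || continue
        # If the kernel lists the available governors, refuse unknown ones.
        if [ -r "$d/scaling_available_governors" ]; then
            case " $(cat "$d/scaling_available_governors") " in
                *" $gov "*) ;;
                *) echo "$d: governor $gov not available" >&2; continue ;;
            esac
        fi
        echo "$gov" > "$d/scaling_governor"
    done
}
```

Run as root, `set_governor ondemand` followed later by `set_governor performance` would reproduce the two settings compared above.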

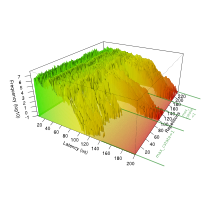

Effect of a single kernel parameter

In another experiment, various methods were investigated to prevent a modern energy-saving processor from entering a sleep state. Although saving energy is very important for our planet, it adversely affects real-time capability. Obviously, allowing the processor to go to sleep in idle state may prevent a timely response to an asynchronous external event. Thus, we cannot have both low power consumption and low worst-case latency at the same time. We can only hope that such smart processors running sleeplessly at full speed are heavily used in solar and wind energy generation plants to fully compensate for the (ecologically incorrect) disabling of energy-saving.

The display shows combined latency plots during a period of 112 days with two measurements daily. The time scale goes from back to front, i.e. the most recent measurement is the first one. The kernel command line parameter

processor.max_cstate=1

was specified during two measurement periods and removed in the remaining time. As can be seen clearly, without this parameter the processor is allowed to enter sleep states higher than 1, which increases the worst-case latency by about 100 µs. Obviously, under worst-case conditions, the processor needs about that long to wake up from sleep and go back to work.
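When comparing measurement periods like these, it helps to record whether the running kernel was actually booted with the parameter. A minimal sketch, assuming the usual /proc/cmdline interface; the function name `cmdline_max_cstate` and its optional file argument are made up for illustration.

```shell
#!/bin/sh
# Print the value of processor.max_cstate from a kernel command line,
# or nothing if the parameter was not specified.
# $1 = optional file to read (default: /proc/cmdline)
cmdline_max_cstate() {
    file=${1:-/proc/cmdline}
    # One parameter per line, then keep only the value of the first match.
    tr ' ' '\n' < "$file" | sed -n 's/^processor\.max_cstate=//p' | head -n 1
}
```

An empty result means the parameter was not given at boot, so the processor may enter deeper sleep states.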